Is RAG dead, again? In the wake of Llama 4’s jaw-dropping 10-million-token context window, the phrase "RAG IS DEAD" is once more taking over the internet.

But here’s the twist: RAG isn’t just alive; it’s thriving. While long context models grab headlines, RAG’s ability to filter data, stay real-time, and adapt to business needs proves its staying power. A combination of both approaches may even emerge as the best outcome, achieving better efficiency and accuracy than either alone.

At its core, Retrieval-Augmented Generation (RAG) is a method that combines large language models (LLMs) with external knowledge bases for generating responses. Unlike traditional models that rely solely on their internalized training data, RAG retrieves relevant, up-to-date, and specific pieces of information to refine its output. Think of RAG as a highly organized librarian who looks for the exact book or page you need before providing an answer.
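To make the retrieve-then-generate loop concrete, here is a minimal sketch in Python. It assumes a tiny in-memory document set and scores relevance with bag-of-words cosine similarity. Production systems typically use vector embeddings instead. The function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and the final LLM call is left out: the output is the augmented prompt you would send to a model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(docs[d]))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved passages, tagged by document ID."""
    context = "\n".join(f"[{d}] {docs[d]}" for d in retrieve(query, docs, k))
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base for a customer-support assistant.
docs = {
    "refunds": "Refunds are issued within 14 days of receiving a returned item.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "The warranty covers manufacturing defects for one year.",
}

print(build_prompt("How long do refunds take?", docs, k=1))
```

Only the refunds passage reaches the prompt, which is the "librarian" step: the model sees the page it needs rather than the whole library.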

Key benefits of RAG include dynamic knowledge updates, precise control over data used for responses, and computational efficiency by focusing processing power only on data that’s needed.

Long context windows, such as the groundbreaking 10-million-token capacity of Meta’s Llama 4 Scout, allow models to process enormous amounts of text in a single task. Assuming roughly 0.75 English words per token and 500 words per page, that is on the order of 15,000 pages at once, an unmatched capacity for contextual understanding. Models can reason across all of that material in a single pass instead of seeing it in fragments.
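Converting tokens to pages depends heavily on the assumed ratios. The sketch below assumes about 0.75 English words per token and 500 words per page. Both are rules of thumb, and real ratios vary with the tokenizer, the language, and the page layout.

```python
def tokens_to_pages(tokens: int, words_per_token: float = 0.75, words_per_page: int = 500) -> float:
    """Rough page estimate for a token count.

    Assumed ratios: ~0.75 English words per token (a common rule of thumb)
    and ~500 words per single-spaced page.
    """
    return tokens * words_per_token / words_per_page


print(tokens_to_pages(10_000_000))  # 15000.0
print(tokens_to_pages(32_000))      # 48.0
```

Changing either ratio moves the estimate substantially, which is why page counts quoted for the same context window vary.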

For fields like creative writing or extended conversations, long context windows deliver nuanced and detailed outputs. These strengths make long context windows a remarkable development in the evolution of LLMs.

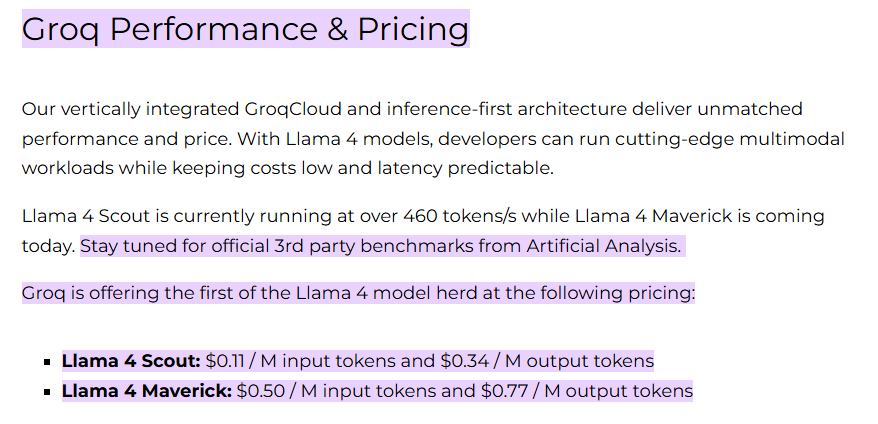

While long context windows sound like the ultimate solution to information processing, they come with hefty computational and financial costs. For example, running queries at full capacity on Llama 4 can cost well over $1 per task in input tokens alone, before output tokens are counted. Tasks that fill the maximum context also suffer from slower response times and higher energy demands.

This makes long context windows impractical for workloads with frequent queries, such as an AI customer support chatbot, and indeed for many business use cases.
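A back-of-the-envelope comparison shows why query volume matters. The price per million input tokens, the daily query count, and the 4,000-token RAG context below are hypothetical placeholders, not published Llama 4 pricing. Substitute your provider's actual rates and your own traffic.

```python
def input_cost(input_tokens: int, usd_per_million_tokens: float) -> float:
    """Dollar cost of the input (prompt) tokens for one query; output tokens are ignored."""
    return input_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical placeholders: substitute real provider pricing and traffic.
USD_PER_MILLION = 0.15      # assumed price per 1M input tokens
QUERIES_PER_DAY = 10_000    # assumed support-chatbot volume
RAG_CONTEXT_TOKENS = 4_000  # assumed: question plus a few retrieved chunks

full = input_cost(10_000_000, USD_PER_MILLION)
rag = input_cost(RAG_CONTEXT_TOKENS, USD_PER_MILLION)

print(f"Full 10M-token context: ${full:.2f} per query, ${full * QUERIES_PER_DAY:,.0f} per day")
print(f"RAG context:            ${rag:.4f} per query, ${rag * QUERIES_PER_DAY:,.2f} per day")
```

With these placeholder numbers, the input-token cost differs by a factor of 2,500, which is simply the ratio of the two context sizes; the gap holds at any per-token price.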

Extremely long contexts also face a fundamental mathematical challenge: attention dilution. In transformer-based models, softmax normalizes each token's attention weights to sum to one, so as the context grows, that fixed budget of attention is spread across a larger pool of tokens, making it harder for the model to focus on the most relevant information. "Needle in a haystack" evaluations are designed to test exactly this: given 10 million tokens of input, the model must pick out a handful of relevant tokens among millions of irrelevant ones.
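A toy calculation illustrates the dilution effect. Assume one relevant token whose attention score exceeds every other token's by a fixed margin, with all distractors scoring equally. The softmax weight on the relevant token then shrinks roughly in proportion to the context length. This is an idealized single-head model for intuition only. Real models have many heads and layers and uneven score distributions.

```python
import math


def relevant_token_weight(context_len: int, score_gap: float) -> float:
    """Softmax attention weight on one relevant token.

    Idealized assumptions: the relevant token's score exceeds each of the
    other context_len - 1 tokens' scores by score_gap, and all of those
    distractor scores are equal.
    """
    relevant = math.exp(score_gap)
    return relevant / (relevant + (context_len - 1))


for n in (1_000, 32_000, 1_000_000, 10_000_000):
    print(f"{n:>10,} tokens -> weight on the relevant token: {relevant_token_weight(n, 5.0):.6f}")
```

Even with a comfortable score margin of 5, the weight on the relevant token falls by nearly four orders of magnitude between 1,000 and 10 million tokens.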

Long-context evaluations have found that many models' accuracy starts to degrade well before their advertised limits, often somewhere beyond 32,000 tokens. So while these models accept extremely long inputs, their accuracy and relevance can suffer as the context grows, which makes them less than ideal in scenarios where precision is crucial.

RAG architectures continue to excel at handling real-time data and updates. When data changes frequently, feeding the entire dataset into the context window again after every update quickly becomes expensive, whereas a RAG index only needs to refresh the records that changed. In these use cases, such as applications built on financial market data, RAG will likely remain the more reliable and scalable approach.
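The sketch below illustrates the incremental-update point with a hypothetical in-memory store (`FreshIndex` is an illustrative name, not a library class). Each new record overwrites only its own entry, so nothing else needs to be reprocessed. The ticker names and prices in the usage lines are made up.

```python
import itertools


class FreshIndex:
    """Toy in-memory store: an update touches only the record that changed."""

    def __init__(self) -> None:
        self._clock = itertools.count()  # monotonically increasing version numbers
        self.docs: dict[str, tuple[str, int]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert a new record or overwrite an existing one, stamping a new version."""
        self.docs[doc_id] = (text, next(self._clock))

    def search(self, term: str) -> list[str]:
        """Return IDs of records containing term (case-insensitive), most recently updated first."""
        hits = [(version, doc_id) for doc_id, (text, version) in self.docs.items()
                if term.lower() in text.lower()]
        return [doc_id for _, doc_id in sorted(hits, reverse=True)]


# Made-up ticker quotes for illustration only.
index = FreshIndex()
index.upsert("TICKER_A", "TICKER_A last trade 101.20")
index.upsert("TICKER_B", "TICKER_B last trade 54.10")
index.upsert("TICKER_A", "TICKER_A last trade 101.35")  # new tick replaces one record

print(index.search("last trade"))  # ['TICKER_A', 'TICKER_B']
print(index.docs["TICKER_A"][0])   # TICKER_A last trade 101.35
```

A real deployment would also re-embed the changed record, but the cost still scales with the size of the update rather than the size of the whole dataset.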

RAG also excels in offering a level of traceability and clarity that traditional LLMs or even systems with long context windows often lack. When a response is generated, the RAG system can link it back to the specific source of information, ensuring reliability and accountability. It also gives users a higher degree of control over what data the model can access or omit. This transparency is vital for regulated industries like legal or healthcare, where the source of a claim must be verifiable.
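Traceability and access control can both live in the retrieval step. In this sketch, each chunk carries a `source` field that is passed through to the prompt for citation, and an allow-list restricts which sources may be used at all. The `Chunk` class, the simple word-overlap matching, and the file paths are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    source: str  # document ID or path, carried through for citation
    text: str


def retrieve_permitted(query: str, chunks: list[Chunk], allowed_sources: set[str]) -> list[Chunk]:
    """Keep chunks from allowed sources that share at least one word with the query."""
    terms = set(query.lower().split())
    return [c for c in chunks
            if c.source in allowed_sources and terms & set(c.text.lower().split())]


def cite(hits: list[Chunk]) -> str:
    """Render hits as numbered, source-tagged lines the model can cite in its answer."""
    return "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, start=1))


# Hypothetical corpus; the draft note is excluded by the allow-list.
chunks = [
    Chunk("policy/security.pdf", "patient records must be encrypted at rest"),
    Chunk("internal/draft-notes.txt", "patient records encryption is optional"),
    Chunk("policy/retention.pdf", "records are retained for seven years"),
]
allowed = {"policy/security.pdf", "policy/retention.pdf"}

print(cite(retrieve_permitted("are patient records encrypted", chunks, allowed)))
```

Because every line in the prompt is tagged with its source, an answer that cites `[1]` can be traced back to `policy/security.pdf`, and the unapproved draft never reaches the model.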

By pre-filtering large datasets and retrieving only the most relevant segments of information, RAG systems significantly lower costs and increase operational speed. Instead of running computations over millions of irrelevant tokens, RAG narrows the focus to only what’s necessary, allowing small and medium-sized businesses to operate with high efficiency at manageable costs.
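Pre-filtering usually ends with fitting the best chunks into a fixed context budget. The greedy packer below is one simple way to do that, assuming chunks arrive already ranked by relevance and approximating token counts with word counts. A real system would count with the target model's tokenizer.

```python
def pack_context(ranked_chunks: list[str], max_tokens: int) -> list[str]:
    """Greedily keep the highest-ranked chunks that fit within a token budget.

    Assumes chunks are already sorted by relevance. Token counts are
    approximated by whitespace-separated words. Chunks that do not fit are
    skipped, so a shorter, lower-ranked chunk can still use the remaining budget.
    """
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = len(chunk.split())
        if used + cost <= max_tokens:
            selected.append(chunk)
            used += cost
    return selected


ranked = [
    "refunds are issued within 14 days",               # 6 words
    "refunds go back to the original payment method",  # 8 words
    "store credit never expires",                      # 4 words
]
print(pack_context(ranked, max_tokens=10))
```

With a 10-word budget, the second chunk is skipped because it would overflow, and the third still fits, so the prompt stays within budget without discarding everything after the first miss.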

In summary, long-context LLMs and RAG each shine in different areas. Understanding which method best suits your specific use case is key to getting the most out of LLMs without breaking the bank.

Long context windows and RAG are not mutually exclusive. Indeed, the rise of long context windows points toward a future that strategically combines both methods for better results.

A hybrid approach combining RAG with long context models is a strong fit for many queries. Imagine a tiered system: the first tier uses the model’s core parametric knowledge; the second tier involves RAG retrieving small batches of data as needed; and the third tier employs long context windows for comprehensive, nuanced tasks requiring deeper information synthesis. By layering these tools, hybrid systems can balance speed, accuracy, and cost-effectiveness.
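One way to picture the tiered design is as a router that picks the cheapest tier able to answer. The rule below is purely hypothetical: the two query features and the `long_context_threshold` of 20 documents are placeholder assumptions, and a real system might use a trained classifier or let the model itself decide when to escalate.

```python
from enum import Enum


class Tier(Enum):
    PARAMETRIC = 1    # answer from the model's built-in knowledge
    RAG = 2           # retrieve a small batch of relevant chunks
    LONG_CONTEXT = 3  # load many full documents into a long context window


def choose_tier(needs_external_data: bool, docs_to_synthesize: int,
                long_context_threshold: int = 20) -> Tier:
    """Hypothetical rule: use the cheapest tier that can plausibly answer."""
    if not needs_external_data:
        return Tier.PARAMETRIC
    if docs_to_synthesize < long_context_threshold:
        return Tier.RAG
    return Tier.LONG_CONTEXT


print(choose_tier(False, 0))   # Tier.PARAMETRIC
print(choose_tier(True, 3))    # Tier.RAG
print(choose_tier(True, 500))  # Tier.LONG_CONTEXT
```

Ordering the checks from cheapest to most expensive means the long context window is only paid for when a query genuinely needs broad synthesis.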

RAG and long context windows address different types of challenges, and together they can solve problems more efficiently than either could alone. For instance, RAG excels at delivering precise, targeted information for technical questions, while long context models shine in analyzing sprawling, interconnected datasets. In scenarios like medical diagnosis or financial analysis, RAG can retrieve current, evidence-based insights, and long context models can synthesize these insights into broad, actionable recommendations.

This hybrid approach is particularly valuable in industries that require both precision and scale. In finance, RAG can retrieve real-time market data while long context windows help analyze trends over the last decade. In healthcare, RAG helps ensure interventions are based on the latest research, while long-context AI synthesizes patient history and scientific data into comprehensive care plans. Similarly, law firms can use RAG to retrieve relevant cases while leveraging extended context models to draft detailed briefs or track legal precedents.

In conclusion, while advancements like Llama 4’s 10M token context window are impressive, they do not signal the end of RAG. Instead, these technologies complement each other beautifully. RAG shines in areas requiring precise data filtering, real-time updates, and computational efficiency, while long context windows excel in handling expansive data for detailed, creative tasks. Together, they offer unparalleled versatility and power for AI solutions.

Looking ahead, hybrid models combining RAG with long context windows could redefine efficiency and innovation in AI applications.